🎬 An Invisible World Behind the Screen

In recent years I’ve been neck-deep in the entertainment world, more specifically in the video universe. And while from the outside it might seem like “putting movies or channels on TV”, the reality is that behind it all there’s an entire world of engineering, coordination and digital magic that you can’t see… but that makes everything work perfectly.

In my work, everything can be divided into two main families:

Linear video: live TV, with channels that broadcast at an exact time. This includes live football matches, news broadcasts, entertainment shows that air at specific times, and any content transmitted in real-time just like traditional television broadcasting.

Video on demand (VOD): the Netflix model, where you choose what to watch and when. This includes platforms like Disney+, HBO Max and Amazon Prime Video, as well as the movie and series catalogs of cable operators. These are essentially video files stored on servers, ready to be played whenever the user requests them, with no fixed schedule.

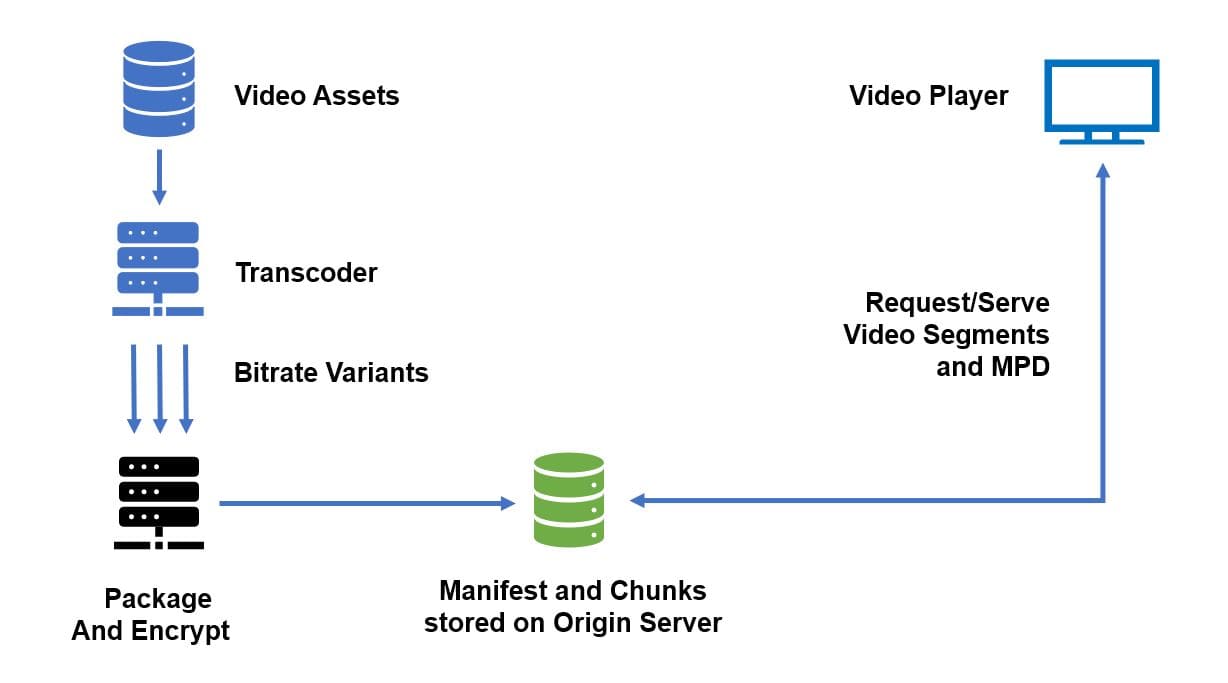

General architecture of a professional video distribution system


💡 Did you know?

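- A 4K football match can generate around 50 GB of data, depending on the bitrate

- Netflix members watch more than 125 million hours of video every day

- CDNs can cut latency from around 200 ms to around 20 ms by serving content from nearby servers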
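How big a match gets depends mostly on bitrate and duration. A quick back-of-the-envelope check as a Python sketch; the bitrates and the 105-minute window below are assumptions for illustration, not measured values:

```python
def stream_size_gb(bitrate_mbps: float, duration_min: float) -> float:
    """Data volume in gigabytes (10**9 bytes) of a constant-bitrate stream."""
    bits = bitrate_mbps * 1_000_000 * duration_min * 60
    return bits / 8 / 1_000_000_000


# Hypothetical 105-minute window: 90 minutes of play plus half-time and stoppages.
for label, mbps in [("4K delivery profile, assumed 25 Mbit/s", 25),
                    ("4K high-bitrate feed, assumed 65 Mbit/s", 65)]:
    print(f"{label}: {stream_size_gb(mbps, 105):.1f} GB")
```

At an assumed 25 Mbit/s the match comes to roughly 20 GB; reaching about 50 GB takes around 65 Mbit/s, which suggests that figure refers to a higher-bitrate feed or to several quality profiles combined.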

📡 Linear Video: The Race Against Time and Latency

Everything starts at a reception and ingestion center. Here, original signals arrive from different points around the world, sent by channels like Canal 13, CNN or HBO.

These signals usually arrive uncompressed, over interfaces like SDI, with extremely high quality… but also with such a high data rate that it would be impossible to send them as-is to every home.
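To see why compression is unavoidable, the raw bitrate of uncompressed video can be computed from resolution, frame rate and sampling. A minimal Python sketch, assuming 10-bit 4:2:2 sampling (the usual studio format carried over SDI) and counting only the active picture:

```python
def uncompressed_bitrate_gbps(width: int, height: int, fps: float,
                              bits_per_sample: int = 10, chroma: str = "4:2:2") -> float:
    """Active-picture bitrate of uncompressed video in Gbit/s.

    Ignores blanking intervals, ancillary data and audio, so real SDI link
    rates are somewhat higher than these figures.
    """
    # Average samples per pixel: one luma sample plus the chroma samples.
    samples_per_pixel = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}[chroma]
    return width * height * fps * samples_per_pixel * bits_per_sample / 1e9


hd = uncompressed_bitrate_gbps(1920, 1080, 50)
uhd = uncompressed_bitrate_gbps(3840, 2160, 50)
print(f"1080p50: {hd:.2f} Gbit/s, 2160p50: {uhd:.2f} Gbit/s")
# Compare with an assumed 25 Mbit/s 4K delivery profile.
print(f"4K compression ratio: about {uhd * 1000 / 25:.0f}:1")
```

Roughly 8.3 Gbit/s for 4K at 50 frames per second, against a few tens of Mbit/s per home: the transcoder has to shrink the signal by a factor of several hundred.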

That’s where transcoders come in: specialized equipment that “translates” that signal to different profiles and qualities, so it fits the contracted plan and each client’s device.

At this point a key dilemma appears: latency.

- If you reduce it to a minimum, your customer will cheer the goal before their neighbor.

- If you prioritize quality, avoiding freezes, buffering and reload messages, the signal will arrive a few seconds later. And then your neighbor will probably cheer the goal before you.

Latency is like a scale: each operator decides how to balance speed and quality according to their strategy. And meanwhile, there are 24/7 monitoring teams watching metrics, alarms and KPIs to detect and correct problems before they reach the viewer.
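The trade-off can be made concrete with a rough latency budget. This Python sketch is a simplified model, not a measurement: every number is an assumption, and it relies on the chunks described in the next section (a chunk can only be published once complete, and the player buffers a few before starting). The three-chunk buffer in the second profile follows the HLS specification (RFC 8216), which advises players not to start closer than three target durations from the live edge:

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    """Rough glass-to-glass latency model for segmented live streaming (illustrative)."""
    encode_s: float          # transcoding/encoding delay (assumed)
    segment_s: float         # chunk duration; a chunk is published only once complete
    delivery_s: float        # origin + CDN + last-mile transfer (assumed)
    buffered_segments: int   # chunks the player holds before starting playback

    def total(self) -> float:
        return (self.encode_s + self.segment_s + self.delivery_s
                + self.buffered_segments * self.segment_s)


low_latency = LatencyBudget(encode_s=1.0, segment_s=2.0, delivery_s=0.5, buffered_segments=2)
robust = LatencyBudget(encode_s=2.0, segment_s=6.0, delivery_s=1.0, buffered_segments=3)
print(f"low-latency profile: ~{low_latency.total():.1f} s")
print(f"quality-first profile: ~{robust.total():.1f} s")
```

Shorter chunks and a smaller buffer cut the delay from roughly half a minute to a few seconds, but leave the player less margin to absorb a network hiccup: that is exactly the scale each operator has to balance.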

📦 Packagers, Chunks and ABR: The Digital Translation of Video

Once the signal has passed through the transcoders and we have different profiles ready, it’s time to prepare it so any device can understand it.

Here come the packagers, which take video, audio and subtitles and divide them into small fragments called chunks, normally between 2 and 10 seconds.

Why in pieces?

Because this way the device can request and play content little by little, without waiting to download it completely. This allows:

- Starting playback faster.

- Adapting to changes in internet connection without the user noticing cuts.
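As a sketch of what a packager produces, the Python functions below split a timeline into fixed-length chunks and write a minimal HLS media playlist, the index the player downloads to know which chunks exist. The tags follow RFC 8216; the file names are hypothetical, and a real packager also cuts at keyframe boundaries and writes one playlist per quality profile:

```python
import math


def segment_durations(total_s: float, target_s: float) -> list[float]:
    """Split a timeline into chunks of target_s seconds; the last one may be shorter."""
    count = math.ceil(total_s / target_s)
    return [min(target_s, total_s - i * target_s) for i in range(count)]


def hls_media_playlist(durations: list[float], uri_pattern: str = "segment_{:05d}.ts") -> str:
    """Build a minimal VOD HLS media playlist listing each chunk in order."""
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",                                   # allows decimal EXTINF values
        f"#EXT-X-TARGETDURATION:{math.ceil(max(durations))}",  # integer upper bound
        "#EXT-X-MEDIA-SEQUENCE:0",
    ]
    for i, d in enumerate(durations):
        lines.append(f"#EXTINF:{d:.3f},")   # duration of the next chunk
        lines.append(uri_pattern.format(i))  # hypothetical chunk file name
    lines.append("#EXT-X-ENDLIST")            # no more chunks will be added
    return "\n".join(lines) + "\n"


print(hls_media_playlist(segment_durations(23.0, 6.0)))
```

For live channels the same playlist is kept open (no `#EXT-X-ENDLIST`) and updated as each new chunk is published.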

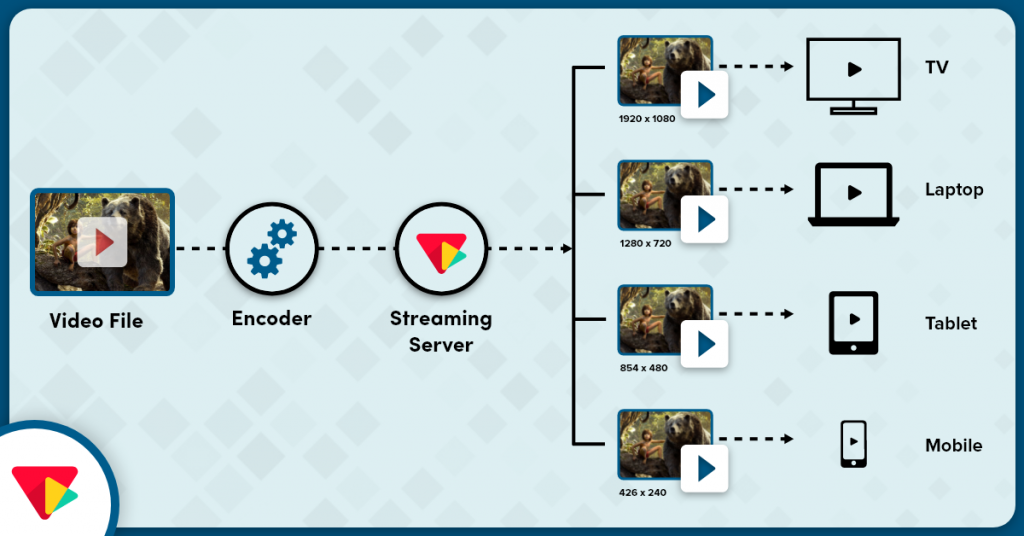

This is where ABR (Adaptive Bitrate Streaming) comes into play:

- The same content exists in several qualities (for example, 4K, HD, SD).

- The player constantly measures your connection and your device’s capacity.

- If everything is going well, it switches up to the best quality; if the connection degrades, it lowers the resolution before playback stalls.
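The player’s decision can be sketched in a few lines of Python. This is a simplified throughput-based rule under stated assumptions, not the algorithm of any particular player (real players also weigh their buffer level); the ladder bitrates, the 0.8 safety factor and the three-sample window are hypothetical:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    height: int          # vertical resolution in pixels
    bitrate_kbps: int    # encoded bitrate of this profile


# Hypothetical bitrate ladder; real ladders are tuned per operator and content.
LADDER = [
    Rendition("SD", 576, 1_800),
    Rendition("HD", 1080, 6_000),
    Rendition("4K", 2160, 16_000),
]


def choose_rendition(throughput_kbps: list[float], max_height: int,
                     safety: float = 0.8, window: int = 3) -> Rendition:
    """Pick the best rendition that fits the measured throughput and the screen."""
    recent = throughput_kbps[-window:]
    # Harmonic mean: dominated by slow samples, so it reacts quickly to drops.
    estimate = len(recent) / sum(1 / s for s in recent)
    budget = estimate * safety
    fits = [r for r in LADDER
            if r.height <= max_height and r.bitrate_kbps <= budget]
    # If nothing fits, fall back to the lowest profile instead of returning nothing.
    return max(fits, key=lambda r: r.bitrate_kbps) if fits else LADDER[0]


print(choose_rendition([30_000, 28_000, 32_000], max_height=2160).name)  # 4K
print(choose_rendition([30_000, 9_000, 8_000], max_height=2160).name)    # HD
```

With throughput around 30 Mbit/s a 4K TV gets the 4K profile; when a competing upload drags the latest samples down to 8 or 9 Mbit/s, the estimate falls and the player steps down to HD. The `max_height` check is what keeps a phone from downloading 4K it cannot display.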

💡 Real example: if you’re watching a match in 4K on your smart TV and someone in your house starts uploading a huge video, the player can automatically switch you down to HD so the match keeps playing smoothly.

Automatic quality adaptation based on user connection


In OTT (over-the-top) services, this is what lets you keep watching your series both on the subway with mobile data and at home on fiber.

🎬 Video On Demand (VOD): The Challenge of Massive Storage

VOD is another story. Here we store content so it’s ready when the client requests it. For years we’ve used Ericsson’s VSPP, a robust platform that delivers in multiple formats, just like a packager, but focused on stored material.

Main variants:

Classic VOD: movies or series stored that you can watch at any time.

Replay: we record linear content for a number of days so you can watch it later if you didn’t see it live.

The big challenge here is massive storage: recording 500 channels, 24 hours a day, for 30 days can add up to tens of petabytes of data. Although we use compression and optimization rules, it’s still an enormous infrastructure that must be resilient and redundant.
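The order of magnitude is easy to check. A Python sketch under explicit assumptions: every profile of each channel is stored (30 Mbit/s in total per channel, a hypothetical figure) and the archive is kept in two geographic sites:

```python
def replay_storage_pb(channels: int, days: int, ladder_kbps_total: float,
                      copies: int = 2) -> float:
    """Storage in petabytes (10**15 bytes) for a rolling replay window."""
    seconds = days * 24 * 3600
    bytes_per_channel = ladder_kbps_total * 1000 / 8 * seconds
    return channels * bytes_per_channel * copies / 1e15


# Assumptions: 30 Mbit/s of stored profiles per channel, replicated in 2 sites.
print(f"{replay_storage_pb(500, 30, 30_000):.2f} PB")
```

Under these assumptions the archive comes to roughly 10 PB; adding 4K profiles, more sites or a longer replay window quickly pushes it into the tens of petabytes.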

At most European operators, this infrastructure is duplicated across different geographic sites. This means that:

- If an entire data center fails, the client continues receiving their content from another site.

- We can move traffic from one place to another for maintenance or updates without anyone noticing.

- Even in the worst case, the service stays up and the viewer never knows something failed.

💡 Real example: if a data center in Amsterdam shuts down completely, the system redirects all traffic to Frankfurt in seconds, and the match you’re watching continues without interruptions.

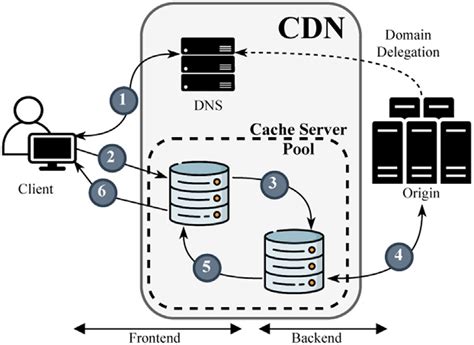

🌍 CDN: The Content Highways

The final stage is the CDN (Content Delivery Network), a global network of servers that delivers content from the point closest to the user.

If you’re in Santiago, your series doesn’t come from Amsterdam: it comes from a local server so it arrives in milliseconds. CDNs were born to distribute web pages, but today they’re the backbone of online video, allowing millions of people to watch a premiere simultaneously without saturating the network.
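Distance matters because light in optical fiber covers roughly 200 km per millisecond, which puts a hard floor under round-trip time. This Python sketch computes that floor for a viewer in Santiago, compares a server in Amsterdam with a local edge, and picks the closest. Coordinates are approximate, the edge location is hypothetical, and real CDNs steer users with DNS or anycast and measured latency, not geography alone:

```python
import math

EARTH_RADIUS_KM = 6371.0
FIBER_KM_PER_MS = 200.0   # light in optical fiber travels at roughly 2/3 of c


def great_circle_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Haversine distance between two points given in decimal degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def min_rtt_ms(distance_km: float) -> float:
    """Lower bound on round-trip time: propagation only, over a straight fiber."""
    return 2 * distance_km / FIBER_KM_PER_MS


viewer = (-33.45, -70.67)                       # Santiago (approximate)
servers = {"Amsterdam": (52.37, 4.90),          # distant origin
           "Santiago edge": (-33.42, -70.61)}   # hypothetical local edge server

rtts = {name: min_rtt_ms(great_circle_km(*viewer, *pos)) for name, pos in servers.items()}
nearest = min(rtts, key=rtts.get)
for name, rtt in rtts.items():
    print(f"{name}: >= {rtt:.1f} ms")
print("serve from:", nearest)
```

Even in this ideal straight-line case, Amsterdam is more than 100 ms away round trip while the local edge is well under a millisecond; real routes are longer, so the actual gap is larger.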

In a context where video shares the bandwidth with all other internet traffic, optimizing every bit is fundamental.
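A premiere does not saturate the network largely because of caching: the edge fetches each chunk from the origin once and serves every later request locally. A toy Python model of that effect, using an LRU cache as a stand-in for an edge server; `fetch_from_origin`, the chunk names and the viewer count are hypothetical, and real CDNs add cache tiers, expiry times and request coalescing:

```python
from collections import OrderedDict


def fetch_from_origin(chunk_id: str) -> bytes:
    """Hypothetical stand-in for an HTTP request to the origin/packager."""
    return f"payload for {chunk_id}".encode()


class EdgeCache:
    """Tiny LRU cache standing in for a CDN edge server (illustrative only)."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.store = OrderedDict()
        self.origin_fetches = 0

    def get(self, chunk_id: str) -> bytes:
        if chunk_id in self.store:
            self.store.move_to_end(chunk_id)   # hit: mark as most recently used
            return self.store[chunk_id]
        self.origin_fetches += 1               # miss: go back to the origin
        data = fetch_from_origin(chunk_id)
        self.store[chunk_id] = data
        if len(self.store) > self.capacity:
            self.store.popitem(last=False)     # evict the least recently used chunk
        return data


edge = EdgeCache(capacity=100)
viewers = 10_000
chunks = [f"premiere/hd/segment_{i:05d}.ts" for i in range(10)]
for chunk in chunks:            # every viewer requests each new chunk as it airs
    for _ in range(viewers):
        edge.get(chunk)
print(f"requests served: {viewers * len(chunks)}, origin fetches: {edge.origin_fetches}")
```

In this model 100,000 requests reach the edge but only 10 reach the origin, one per chunk, which is the offload that lets millions of viewers watch the same premiere at once.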

How CDNs distribute content from the server closest to the user


🚀 Future and Trends: Towards the Cloud and Beyond

This is how I see the path ahead:

More efficiency, fewer resources

Smaller equipment, automation and AI to maintain high-quality services with less expense.

Global competition

Platforms like Netflix and Disney+ deliver content worldwide over telecom networks that took decades to build. In Europe there was even a debate about making them pay for that use, although the European Commission set the idea aside.

Personalized advertising

Both on TV and streaming, ads adapt to each user using viewing data and preferences, without the user noticing.

Interactive advertising

Shoppable ads that allow buying from the screen, combining massive reach with precise measurements.

AI in distribution

From deciding the best video profile in real time to automatically optimizing advertising campaigns.

Cloud migration

More and more operators are exploring moving their encoders, packagers and even part of the CDN to the cloud.

Advantages: rapid scalability, reduction in hardware investment, operation from anywhere.

Challenges: high costs for 24/7 transmission, added latency from transporting the signal to the cloud, and the need for massive bandwidth between origin and cloud.

Examples: pilots already exist in Europe where live sports channel packaging is done 100% in the cloud, with physical backup in traditional data centers to guarantee continuity.

🚀 Next 5 Years: The Immediate Future

Predictive AI for automatic optimization

Systems will learn consumption patterns and anticipate traffic peaks, automatically adjusting quality and distribution without human intervention.

Edge computing to reduce latency

Processing will move closer to the end user, reducing latency from 20ms to less than 5ms in some cases.

8K streaming goes mainstream

8K resolution will become standard for premium content, requiring new compression and distribution strategies.

Real-time holograms

Holographic transmissions will begin to appear at sporting events and concerts, revolutionizing the viewer experience.

🎯 In Summary: The Invisible Magic of Video

From when the signal enters a reception center until it reaches your screen, video travels a complex chain that combines speed, quality, efficiency, resilience and now also a transition towards the cloud.

And the curious thing is that, if we do our job well, you don’t notice anything: while you watch your favorite series or cheer a goal, an entire army of systems, cables, servers and people is working behind the scenes to make the experience perfect.

💬 What Do You Think?

Did you imagine that behind every channel or movie there was so much engineering? Are you interested in knowing more about some specific aspect of the video world?

🧠 Let me know in the comments or share this article with someone who should understand how the magic of television really works.

✍️ Claudio from ViaMind

“Dare to imagine, create and transform.”

Recommended links on similar topics:

If you want to dive deeper into the world of video and streaming, I recommend these articles:

- Netflix Tech Blog – Rebuilding Netflix Video Processing Pipeline with Microservices: how Netflix rebuilt their video processing pipeline using microservices.

- An End-to-End Pipeline Perspective on Video Streaming (arXiv): a complete perspective on the video streaming pipeline from academic research.

- Real‑Time Video Pipelines – Techniques & Best Practices (It-Jim): techniques and best practices for real-time video pipelines.

If you have other recommendations or want to chat about these technical topics, write to me! I love connecting with people interested in understanding how the technology we use every day really works.
