🌐 AI & Technology Trends – Week of November 17, 2025

What’s really changing the world right now (and how it’s doing it).

This week brings clear signals of structural change in identity, infrastructure, industry, and science. AI is entering the physical world, transforming infrastructure and redefining traditional industries.

1️⃣ Anti-AI masks: the first physical defense against facial recognition

📌 Key points:

- Commercial facial recognition systems achieve >90% accuracy

- Anti-AI masks reduce this accuracy to 0-10% depending on the model

- New market opens: privacy-wear, physical privacy technology

What happened:

Commercial facial recognition achieves >90% accuracy, but physical masks designed to deceive AI can cut this to 0-10%. Dutch designer Jip van Leeuwenstein created a transparent mask that “bends” light: facial recognition software fails to detect a face, yet other people can still see the wearer.

“Physical privacy becomes a technical battlefield. It’s not just software: it’s hardware designed to confuse algorithms.”

Technical mechanisms: Directed optical refraction (curved surfaces that distort facial landmarks), physical adversarial patterns (computed textures that trigger misleading activations inside the network), and keypoint distortion (tiny lenses that break edge detection).
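The “physical adversarial patterns” mechanism is easiest to see in its digital form. The minimal sketch below applies one step of the fast gradient sign method (FGSM) to a hypothetical three-feature linear “face detector”; the weights, inputs, and step size are invented for illustration. Physical attacks on networks such as FaceNet optimize printable textures over many steps and viewing conditions, so treat this only as the underlying principle: push each input feature in the direction that most lowers the model’s confidence.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def face_score(x: list[float], w: list[float], b: float) -> float:
    """Toy linear detector (hypothetical): probability that x is a face."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm_evade(x: list[float], w: list[float], b: float, eps: float) -> list[float]:
    """One FGSM step away from the 'face' label.

    With logistic loss and true label 1, dLoss/dx = (p - 1) * w,
    so stepping along the sign of that gradient raises the loss,
    i.e. lowers the detector's confidence.
    """
    p = face_score(x, w, b)
    grad = [(p - 1.0) * wi for wi in w]
    sign = [(g > 0) - (g < 0) for g in grad]  # +1, -1, or 0 per feature
    return [xi + eps * s for xi, s in zip(x, sign)]


# Hypothetical weights and "facial feature" values, chosen for illustration.
w, b = [2.0, -1.0, 1.5], -0.5
x = [1.0, 0.2, 0.8]
x_adv = fgsm_evade(x, w, b, eps=1.0)

print(f"clean:     {face_score(x, w, b):.3f}")      # 0.924
print(f"perturbed: {face_score(x_adv, w, b):.3f}")  # 0.119
```

Real attacks also constrain the perturbation so it stays printable and survives changes in lighting and pose; this toy omits those constraints.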

Metrics by system:

- FaceNet (Google): 99.6% → 2.3% with adversarial masks

- ArcFace: 98.1% → 0.8% with optical refraction

- DeepFace (Facebook): 97.4% → 1.2% with physical patterns

Why it matters: As automated surveillance spreads, demand for countermeasures grows, opening a new market: privacy-wear.

Broader context: This marks the beginning of a new product category: physical privacy technology.

Practical implications:

- For individuals: Consider physical privacy technologies if you work in environments with constant surveillance. Anti-AI masks are already commercially available.

- For businesses: If you use facial recognition, update your models to detect physical countermeasures. Invest in hybrid systems that combine multiple identification techniques.

- For regulators: Establish legal frameworks for the use of physical privacy technologies. Balance public security with the right to privacy.

Sources:

- P. de Vries & W. Schinkel. “Algorithmic anxiety: Masks and camouflage in artistic imaginaries of facial-recognition algorithms” — SAGE journals, 2019

- Jip van Leeuwenstein. Dutch designer’s website — jipvanleeuwenstein.nl

2️⃣ Artificial consciousness: what they’re really studying (and why it’s concerning)

📌 Key points:

- The debate is no longer whether AI is conscious, but whether we can prove it’s NOT

- Models like GPT-4, Claude, and Gemini show unprogrammed emergent behaviors

- Future regulation will be philosophical, legal, and social, not just technical

What happened:

The question is no longer “Is AI conscious?” but “Can we prove it is NOT?”: we currently lack the scientific tools to do so. According to a European Research Council feature, research into artificial consciousness has become urgent because these systems show emergent properties that no one explicitly programmed.

“We don’t have scientific tools to prove that AI is NOT conscious. And that’s the real problem.”

Observed emergent behaviors: Persistence of internal states (first-order memory), functional self-reference (primitive metacognition), and stable “self” narrative (consistent character under contradictory prompts).

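Reported examples (none of these models carries state from one session to the next on its own; apparent persistence comes from application-level memory features or from context re-supplied in the prompt):

- GPT-4: Maintains narrative coherence and consistent, unprogrammed “preferences” across sessions

- Claude 3.5: Shows first-order memory, retaining emotional styles between conversations days apart

- Gemini Ultra: Shows advanced functional self-reference suggestive of primitive metacognition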

Why it matters: The next wave of regulation will be philosophical, legal, and social. The risk isn’t that AI is conscious, but that it seems conscious without being so, and that people act as if it were.

Broader context: Regulators, philosophers, and scientists are collaborating on evaluation frameworks. The problem isn’t technical but epistemological: how do we know whether something is conscious?

Practical implications:

- For developers: Implement auditing systems to track emergent behaviors. Document any “personality” or coherence you observe in your models.

- For businesses: Establish clear policies on how to interact with AI that shows consciousness-like behaviors. Train your team not to anthropomorphize systems.

- For regulators: Collaborate with philosophers and cognitive scientists to develop evaluation frameworks. Technical regulation alone won’t be enough.

Sources:

- European Research Council. “Scientists’ urgent quest to explain consciousness in AI gathers pace” — Publications Office of the EU

3️⃣ Kimi K2: the model that demonstrates the future will be “giant + open”

📌 Key points:

- Kimi K2, a Mixture-of-Experts (MoE) model, activates only ~32B of its ~1 trillion parameters per token

- 60-80% cost reduction compared to equivalent closed models

- Democratizes access to world-class AI for SMEs

What happened:

Kimi K2, from Moonshot AI, is a well-executed Mixture-of-Experts (MoE) model: of its ~1 trillion total parameters, only ~32B are activated for each token. Because its weights are openly available, it is already used for legal analysis, complex documentation, and structured reasoning without relying on proprietary clouds.

“Out of ~1 trillion total parameters, only ~32B are activated per token. Real efficiency, not marketing.”

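How it works: Each MoE layer holds 384 experts, and a learned router selects 8 of them for every token, alongside one shared expert that is always active. Experts are not hand-assigned to tasks such as reasoning, code, extraction, or dialogue; whatever specialization they have emerges during training.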
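The routing idea can be sketched in a few lines. The minimal example below implements generic top-k gating: a router scores every expert for a token, only the k highest-scoring experts run, and their outputs are mixed with softmax weights. The expert count, k, and the toy experts (each just scales its input) are illustrative assumptions, not Kimi K2’s actual configuration or code.

```python
import math
import random


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale: float):
    """Hypothetical toy expert: a feed-forward block reduced to a scaling."""
    return lambda x: [scale * xi for xi in x]


def moe_forward(x, router_weights, experts, k=2):
    """Sparse MoE layer: score all experts, evaluate only the top-k."""
    # Router logit per expert: dot product of its router vector with the token.
    logits = [sum(r * xi for r, xi in zip(rw, x)) for rw in router_weights]
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    gates = softmax([logits[i] for i in top])  # renormalize over selected experts
    out = [0.0] * len(x)
    for gate, i in zip(gates, top):
        y = experts[i](x)  # unselected experts are never called for this token
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, top, gates


random.seed(0)
dim, n_experts = 4, 8
router_weights = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(n_experts)]
experts = [make_expert(1.0 + i) for i in range(n_experts)]

token = [0.5, -1.0, 0.25, 2.0]
out, chosen, gates = moe_forward(token, router_weights, experts, k=2)
print("experts used:", chosen, "gate weights:", [round(g, 3) for g in gates])
```

Only 2 of 8 experts run in this toy. At Kimi K2’s scale, ~32B active out of ~1T total means roughly 3% of the weights take part in each token’s forward pass, which is where the compute savings come from; every expert still has to be stored in memory.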

Implementation examples:

- Big Law firms (Europe and Asia): M&A review, weeks → days

- Legal documentation startups: Products based on K2 with >95% accuracy

- Management consultancies: Compliance analysis, processing thousands of regulatory documents

Cost comparison:

- Closed models (GPT-4, Claude): $0.03-0.06 per 1K tokens

- Kimi K2 (open MoE): $0.008-0.015 per 1K tokens

- Savings: 60-80% in operational costs
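The savings line follows from the two price ranges above. The short calculation below divides the open-model price by the closed-model price at matching ends of each range and at the extremes; the 100-million-token monthly volume is a hypothetical figure for illustration.

```python
def savings(closed_price: float, open_price: float) -> float:
    """Fractional cost reduction when switching from closed to open pricing."""
    return 1.0 - open_price / closed_price


def monthly_cost(tokens: int, price_per_1k: float) -> float:
    return tokens / 1000 * price_per_1k


CLOSED = (0.03, 0.06)  # USD per 1K tokens (closed models, from the list above)
OPEN = (0.008, 0.015)  # USD per 1K tokens (Kimi K2, from the list above)

# Matching ends: cheapest vs cheapest, most expensive vs most expensive.
like_for_like = (savings(CLOSED[0], OPEN[0]), savings(CLOSED[1], OPEN[1]))
# Extremes: most expensive open vs cheapest closed, and the reverse.
widest = (savings(CLOSED[0], OPEN[1]), savings(CLOSED[1], OPEN[0]))

print("like-for-like: {:.1%} to {:.1%}".format(*like_for_like))  # 73.3% to 75.0%
print("widest range:  {:.1%} to {:.1%}".format(*widest))         # 50.0% to 86.7%

tokens = 100_000_000  # hypothetical monthly volume
print(f"closed: ${monthly_cost(tokens, CLOSED[0]):,.0f}/month, "
      f"open: ${monthly_cost(tokens, OPEN[0]):,.0f}/month")      # $3,000 vs $800
```

Matching ends give roughly 73-75%; pairing the extremes widens the band to 50-87%, so the 60-80% headline sits inside that envelope. Actual savings depend on the input/output token mix and on volume.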

Why it matters: Democratizes access to world-class AI for SMEs and pushes toward a more distributed ecosystem.

Broader context: A fundamental architectural shift that lowers the cost of top-tier AI and reduces dependence on proprietary infrastructure.

Practical implications:

- For SMEs: Evaluate open MoE models as an alternative to proprietary services. Cost savings can be significant if you process large volumes of data.

- For developers: Explore MoE architectures for your projects. Sparse activation cuts the compute needed per token, making very large models cheaper to serve, though every expert must still be held in memory.

- For businesses: Consider migrating from closed to open models to cut operational costs and reduce dependence on a single provider.

Sources:

- “Kimi K2: Open Agentic Intelligence” — arXiv, 2025

- MoonshotAI. Kimi K2 GitHub — GitHub

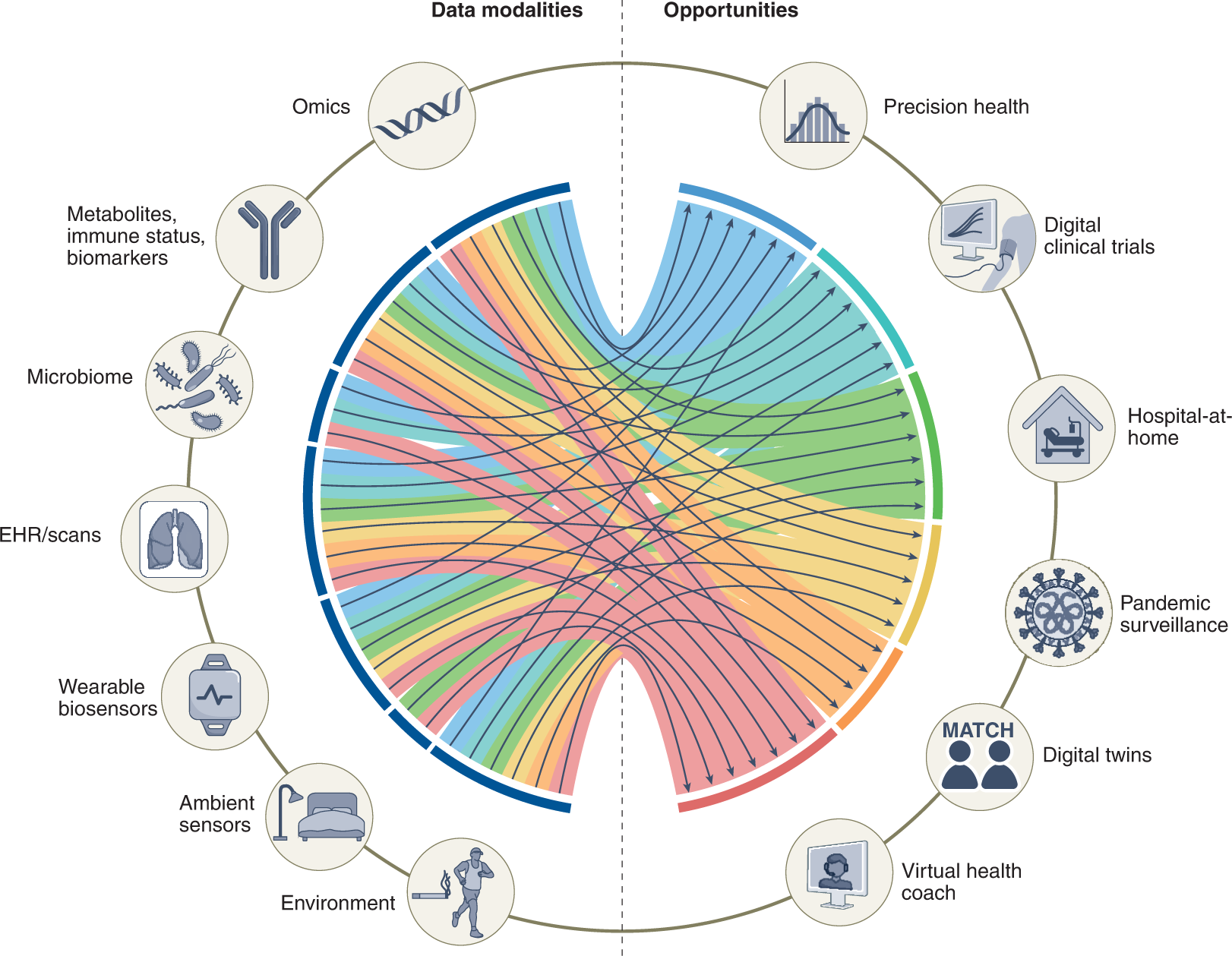

4️⃣ AI in Healthcare: the biggest step isn’t in diagnoses, but in workflows

📌 Key points:

- 40% of doctors’ time is spent on repetitive administrative tasks

- AI systems save 2-3 hours daily per doctor

- Adoption growing 45% annually in Europe and 38% in North America

What happened:

Doctors spend 40% of their time on repetitive tasks. AI is transforming these workflows: automatic clinical summaries, radiology reports, treatment comparisons. It doesn’t replace doctors; it replaces slowness, friction, and errors.

“AI doesn’t replace doctors: it replaces slowness, friction, and errors. The future of healthcare is in workflows, not headlines.”

Key applications: Multimodal diagnosis (integrating imaging, text, and genetic data), administrative automation (freeing 2-3 hours daily per doctor), and molecular structure prediction (AlphaFold 3 predicts how proteins interact with DNA, RNA, and small molecules, accelerating drug discovery).

Real examples:

- Oslo University Hospital: Automatic clinical summaries, 2-3 hours saved daily, 34% fewer administrative errors

- Bavaria hospital network (Germany): Tumor detection in mammograms with 94.2% sensitivity vs 87.5% for human readers

- NHS (UK): 12 pilot hospitals, 28% reduction in documentation time
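Sensitivity is the share of actual cases a reader catches: true positives divided by all true cases. The short calculation below turns the Bavaria figures into missed tumors per 1,000 cancers; the 1,000-case cohort is a hypothetical round number, not data from the hospital network.

```python
def sensitivity(true_pos: int, false_neg: int) -> float:
    """Fraction of actual positives that are detected: TP / (TP + FN)."""
    return true_pos / (true_pos + false_neg)


def missed_per(cohort: int, sens: float) -> float:
    """Expected false negatives in a cohort of true cases at a given sensitivity."""
    return cohort * (1.0 - sens)


COHORT = 1000  # hypothetical number of mammograms that truly contain a tumor
ai_missed = missed_per(COHORT, 0.942)
human_missed = missed_per(COHORT, 0.875)

print(f"AI misses ~{ai_missed:.0f}, human readers miss ~{human_missed:.0f}")  # ~58 vs ~125
print(f"check: {sensitivity(942, 58):.3f}")  # 0.942
```

Sensitivity says nothing about false alarms; a fair comparison also needs specificity (true negatives divided by all healthy cases), which the figures above do not report.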

Annual adoption growth by region: Europe 45%, North America 38%, Asia-Pacific 52%

Broader context: Transformation will be visible in daily workflows, eliminating administrative friction and allowing professionals to focus on human care.

Practical implications:

- For hospitals and clinics: Prioritize automation of repetitive administrative tasks. ROI is faster and more visible than in complex diagnostics.

- For healthcare professionals: Familiarize yourself with AI tools for clinical documentation. These skills will be standard in the coming years.

- For technology providers: Focus on integration with existing systems rather than standalone solutions. Interoperability is key.

Sources:

- European Commission. “Study on the deployment of AI in healthcare” — Publications Office of the EU

- Sachin Jain. “AI Adoption in Healthcare Is Surging: What a New Report Reveals” — Forbes, 2025

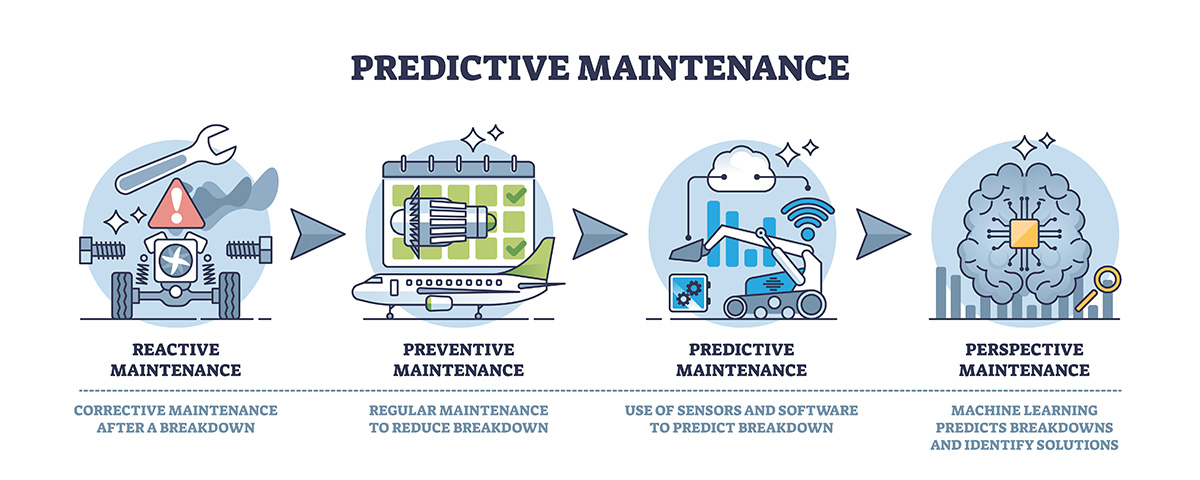

5️⃣ Smart manufacturing: the factory is no longer physical, it’s computational

📌 Key points:

- Average efficiency gain from digital twin implementations: 18-25%

- Process optimization time: cut from weeks to days

- Average productivity increase: 15-22% in the first 12 months

What happened:

Companies in optics, batteries, food, and pharmaceuticals are automating quality control, packaging, and assembly lines. The factory becomes an ecosystem of data, simulations, sensors, and models.

“Smart manufacturing isn’t just automation: it’s simulation, prediction, and continuous optimization. Factories become laboratories where AI experiments before humans invest in physical changes.”

Key components: Complete digital twins (full virtual simulation of the line), sensors plus computer vision (detecting defects invisible to the human eye), and predictive maintenance (forecasting failures days in advance).
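Predicting a failure “days in advance” boils down to forecasting when a health signal will cross a limit. The minimal sketch below fits a least-squares line to daily vibration readings and extrapolates to an alarm threshold; the sensor values, the 4.5 mm/s threshold, and the linear-trend assumption are all hypothetical, and production systems typically use richer models over many signals.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def days_until_threshold(days, readings, threshold):
    """Days from the last reading until the fitted trend crosses the threshold.

    Returns None if the signal is flat or improving (no crossing ahead).
    """
    slope, intercept = fit_line(days, readings)
    if slope <= 0:
        return None
    crossing_day = (threshold - intercept) / slope
    return max(0.0, crossing_day - days[-1])


# Hypothetical week of bearing vibration readings (mm/s RMS), one per day.
days = [0, 1, 2, 3, 4, 5, 6]
vibration = [2.0, 2.2, 2.4, 2.6, 2.8, 3.0, 3.2]

print(days_until_threshold(days, vibration, threshold=4.5))  # about 6.5 days
```

Here the trend reaches the limit about a week out, the kind of lead time that lets a plant schedule maintenance instead of reacting to a breakdown.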

Examples with metrics:

- Electronics factory (Germany): Optimization 6 weeks → 3 days, +18% efficiency, 22% ROI first year

- Pharmaceutical plant (Switzerland): Failure prediction 5-7 days ahead, -31% downtime, $2.3M annual savings

- Battery factory (South Korea): -42% defects, +15% productivity in 8 months

Averages: 18-25% efficiency gain, 12-18 month payback, 15-22% higher productivity, 20-35% lower maintenance costs

Broader context: Factories become laboratories where AI experiments before physical investments.

Practical implications:

- For manufacturers: Start with a digital twin of a critical production line. ROI is faster when focusing on high-value processes.

- For engineers: Learn simulation and digital modeling tools. These skills will be essential in smart manufacturing.

- For executives: Invest in sensors and data collection systems first. Without data, there’s no effective digital twin.

Sources:

- “AI powered digital twin in Industrial IoT” — ScienceDirect

- Hexagon. “Digital Twin Statistics” — hexagon.com

🧭 Conclusion — What these five signals really show

These five trends aren’t isolated.

They’re symptoms of a structural change:

- AI is entering the physical world, from masks to factories and hospitals.

- Intelligence is no longer in apps, but in infrastructure.

- Open models are pushing toward a more distributed future.

- Traditional industries no longer compete with machines, but with algorithms.

- The next wave won’t be “more AI,” but “AI that integrates, hides, and coordinates.”

And the real question is no longer “What can AI do?”

but:

What part of your business, city, or industry is already being transformed without you realizing it?

And how are you preparing for next week, not for 2030?

The future of technology won’t just be faster, but smarter, more autonomous, and more integrated. And those who lead that convergence will have the advantage.

📚 Recommended Readings

“Digital twins and generative AI: A powerful pairing”

McKinsey (2024).

👉 https://www.mckinsey.com/capabilities/tech-and-ai/our-insights/tech-forward/digital-twins-and-generative-ai-a-powerful-pairing

“A Survey on AI-Driven Digital Twins in Industry 4.0”

Huang Z. et al. (2021). PMC.

👉 https://pmc.ncbi.nlm.nih.gov/articles/PMC8512418/

“Artificial Intelligence in Digital Twins — A Systematic Literature Review”

Kreuzer et al. (2024).

👉 https://ssrn.com/abstract=4681915

I invite you to comment: Which of these trends do you think will have the greatest impact on your sector? How is your organization preparing as AI moves into the physical world?

See you next week with more news.

✍️ Claudio from ViaMind

Dare to imagine, create, and transform.