🔍 What does AI have to do with how we watch video?

Until a few years ago, talking about AI in the audiovisual industry sounded like science fiction. Today, from how a video is encoded to how it’s recommended on your screen, AI runs through the entire pipeline. This transformation affects not only platforms like YouTube or Netflix, but also telcos, broadcasters, infrastructure providers, and content creators.

AI is not an add-on. It’s a new gear in the machine.

⚙️ AI in Professional Broadcasting: Behind the Scenes

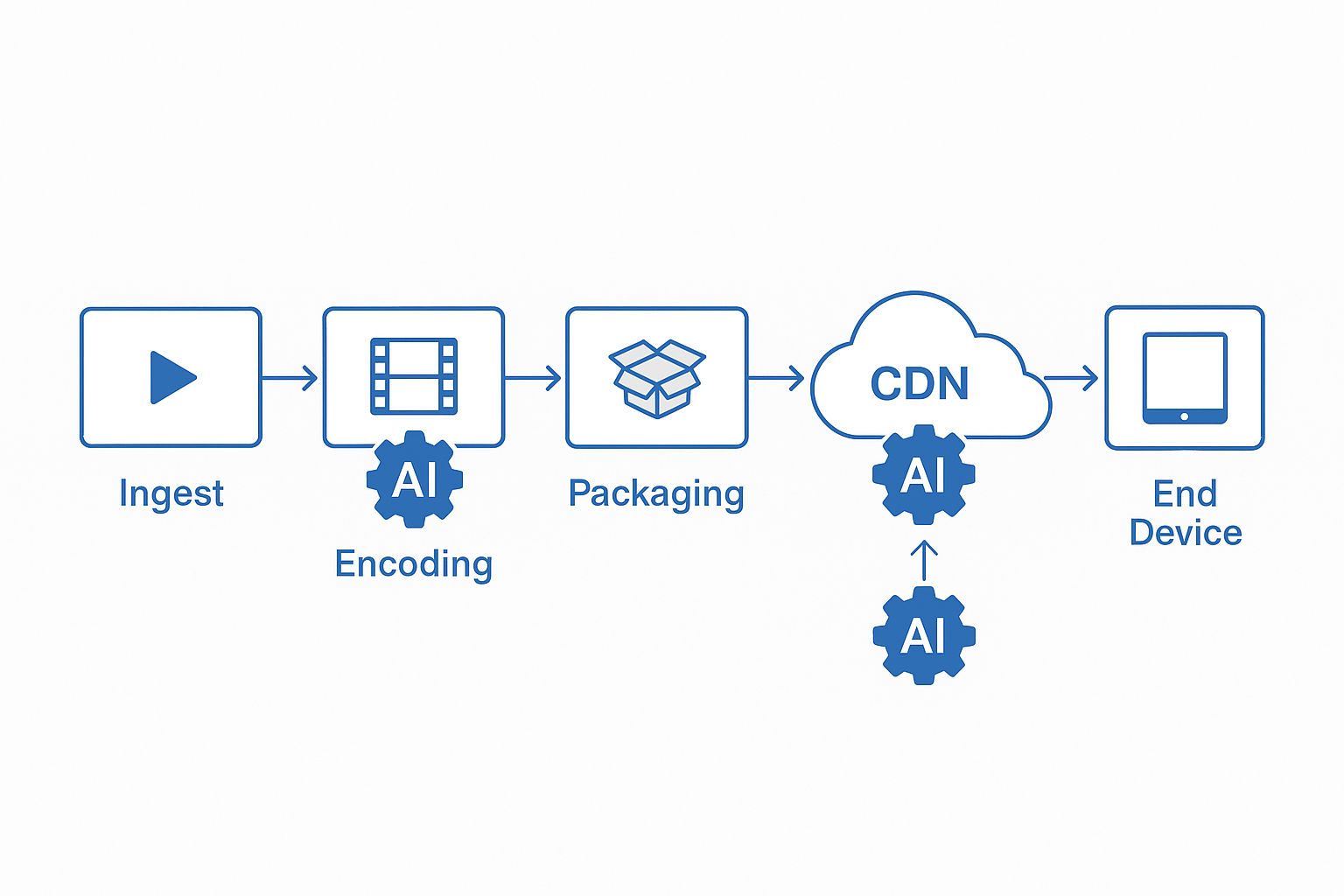

The video transmission chain is complex: encoding, packaging, transport, quality monitoring, distribution. Each link now has optimization potential with AI.

Real cases:

🎞️ Intelligent encoding: Companies like Ateme, Bitmovin, and Harmonic use neural networks to dynamically adjust bitrate based on content (static scenes vs. action), reducing costs without losing quality.

🛰️ Predictive distribution: CDNs like Akamai and Cloudflare apply machine learning to anticipate traffic peaks, reroute data, and reduce latency.

📉 Automated QoE monitoring: Verizon, Vodafone, and Deutsche Telekom use AI to detect signal errors, frame drops, or desynchronization before they impact the user.

🔁 Real-time upconversion: Google and NVIDIA use AI to automatically improve resolution (from 720p to 4K) or increase framerate (from 30 to 60 fps), especially useful in gaming and sports.
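To make the intelligent-encoding idea from the list above a bit more concrete, here is a minimal Python sketch. It is not how Ateme, Bitmovin, or Harmonic actually do it: instead of a neural network, it uses a simple frame-difference heuristic as a stand-in for "how much is happening on screen". The function names, the flattened grayscale frame format, and the 1500 to 6000 kbps range are all assumptions chosen for illustration.

```python
from typing import List, Sequence

# Assumption: one grayscale frame flattened to pixel values in 0..255.
Frame = Sequence[int]


def motion_score(prev: Frame, curr: Frame) -> float:
    """Mean absolute pixel difference between two frames, scaled to 0..1."""
    if len(prev) != len(curr) or not curr:
        raise ValueError("frames must be non-empty and the same size")
    total = sum(abs(a - b) for a, b in zip(prev, curr))
    return total / (255 * len(curr))


def pick_bitrate_kbps(score: float, low: int = 1500, high: int = 6000) -> int:
    """Linearly interpolate between a low and a high bitrate (hypothetical values)."""
    score = min(max(score, 0.0), 1.0)  # clamp to the valid range
    return round(low + score * (high - low))


def plan_bitrates(frames: List[Frame]) -> List[int]:
    """Return one target bitrate per transition between consecutive frames."""
    return [
        pick_bitrate_kbps(motion_score(prev, curr))
        for prev, curr in zip(frames, frames[1:])
    ]


# A static scene: nothing changes between frames.
static_scene = [[10, 10, 10, 10]] * 3
# An extreme "action" scene: every pixel flips on every frame.
action_scene = [[0, 0, 0, 0], [255, 255, 255, 255], [0, 0, 0, 0]]

print(plan_bitrates(static_scene))  # [1500, 1500]
print(plan_bitrates(action_scene))  # [6000, 6000]
```

A production encoder would work per scene or per segment rather than per frame, and would score perceptual quality instead of raw pixel change, but the principle is the same: spend bits where the content needs them.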

📝 My personal experience in this transition

Over the past five years, I’ve worked directly with transcoders, packaging systems, and CDNs, and I can say that AI’s impact is already visible in how we manage quality and efficiency across the entire network.

Today, virtually all vendors offer “AI-integrated video solutions.” Some do it better than others. The reality is that we’re not yet at the point where AI completely handles packaging, but it is starting to dominate key areas like streaming traffic routing and adaptive bitrate management.

It’s only a matter of time, probably a few years, before AI autonomously manages the complete video cycle, from origin to end client, optimizing each variable of the environment in real time.

What strikes me most, however, isn’t the technical aspect, but the ethical and social one. We’ve already seen cases of bullying with AI-generated fake videos, and I’m concerned about where this could lead: a deep loop where we can’t tell whether what we see is real or generated.

Surely it will be another AI that tells us what’s true and what’s not… but honestly, I fear it will be manipulated too, just as media have been for decades through covert propaganda.

AI in video doesn’t just challenge us as technicians. It challenges us as a society.

📺 From Backend to User: AI that Sees, Hears, and Decides

Beyond the backend, AI also shapes the viewer’s experience in real time, often without us noticing.

Visible trends:

⚽ Autonomous cameras: Vision systems like Pixellot or Veo film matches without camera operators, using AI to follow the play, keep focus, and generate highlights.

🎯 Emotional recommendations: Platforms like TikTok or Netflix are experimenting with AI that combines context signals (time of day, device, viewing history) with cues about your mood to better predict what you’ll like.

🌍 Live translation and subtitles: Amazon and YouTube implement multilingual models that translate broadcasts in real-time with automatic synchronization.

🚫 Automated content moderation: Twitch and Facebook use AI to flag offensive language or violent images during live streams, often before any human moderator steps in.

🎬 AI Video Creation: Artificial Producers

This is where things become truly disruptive. Not just optimizing: creating content.

Current technical examples:

Runway (Gen-2): Generates short videos from text or images, used in advertising, fashion, and music.

Pika Labs: Allows creating videos with realistic animations from simple prompts, ideal for marketing or storytelling.

OpenAI Sora: Previewed in early 2024 and opened to ChatGPT subscribers that December, it has shown surprising results creating natural scenes from text (“A woman walking in the rain in Tokyo”).

Synthesia and HeyGen: Generate virtual presenters with voice, gestures, and lip sync in multiple languages.

WSC Sports: Produces automatic highlights for sports leagues like the NBA or the Bundesliga within seconds of each match ending.

🤯 What changes for the industry?

Hybrid roles: Video engineers will need to collaborate with data scientists and creatives. The “Media AI Ops” profile is born.

Visual disinformation: Fake news will no longer be just text or images, but videos indistinguishable from reality.

Cost vs. ethics: Do you edit a video with AI because you can… or because you must? What if it’s cheaper but less transparent?

🎭 The Double Edge: Fabricated Realities

The question is no longer if we can generate realistic fake content. The question is: Who will control that capability?

A child bullying a classmate with a deepfake? A politician generating fake speeches? A company manipulating customer testimonials?

The answer, again, will be AI… but who trains that AI?

💬 What do you think?

Are you excited about what’s coming, or does it make you distrustful? Are you already using any AI tools in your video projects?

🧠 Let me know in the comments or share this article with someone who should read this before it’s too late.

✍️ Claudio from ViaMind

“Dare to imagine, create, and transform.”

Recommended links on similar and fascinating topics:

Here are some recommended articles and resources if you want to know more about AI in video and streaming:

- Lights, Camera, Algorithm! Runway AI Joins the Production Crew (Economic Times). How Runway is collaborating with studios like Netflix and Disney to automate creative processes through AI video generation. A realistic and optimistic look at the future of cinema and advertising.

- Three AI Trends Reshaping the Future of Media and Entertainment (TV Technology). Summary from industry experts on how AI is influencing the audiovisual industry: from autonomous agents that automate the production chain to generative AI as a new monetizable resource.

- This New AI Tool Wants to Work With Filmmakers — Not Replace Them (Marey). The story behind “Marey”, an AI that seeks to empower independent filmmakers rather than replace them. An ethical and collaborative vision of AI video generation.

If you have other recommendations or want to discuss these topics, write to me! I love connecting with curious minds.
